A data pipeline is a series of processes that extract raw data from various sources, transform it into a usable format, and load it into a destination system, such as a data warehouse, analytics tool, or another storage system. Data pipelines are critical in modern data engineering for ensuring seamless, scalable, and reliable data flow.
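
To make the extract → transform → load sequence concrete, here is a minimal sketch of a pipeline as three pluggable stages. It assumes records are plain Python dicts and uses in-memory lists as a stand-in source and destination. The `run_pipeline` function and its parameters are illustrative, not part of any real tool.

```python
from typing import Callable, Iterable

# Illustrative assumption: a record is modeled as a plain dict.
Record = dict

def run_pipeline(
    extract: Callable[[], Iterable[Record]],
    transforms: list[Callable[[Record], Record]],
    load: Callable[[list[Record]], None],
) -> int:
    """Run extract -> each transform in order -> load; return the number of records loaded."""
    records = list(extract())
    for transform in transforms:
        records = [transform(r) for r in records]
    load(records)
    return len(records)

# Hypothetical source and destination: in-memory lists stand in for real systems.
source = [{"name": " alice ", "amount": "10.5"}, {"name": "BOB", "amount": "3"}]
destination: list[Record] = []

loaded = run_pipeline(
    extract=lambda: source,
    transforms=[
        lambda r: {**r, "name": r["name"].strip().title()},  # tidy names
        lambda r: {**r, "amount": float(r["amount"])},       # cast text to numbers
    ],
    load=destination.extend,
)
print(loaded, destination)
```

Keeping each stage a separate function means a source or destination can be swapped without touching the transformation logic.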

Step 2: Data Engineering (ETL Process)

Data engineering is the discipline responsible for designing, building, and managing the systems that collect, store, and process data for analysis. It ensures that data is readily available, high-quality, and usable for business intelligence and analytics. At the core of data engineering lies the ETL process, which stands for Extract, Transform, and Load.

What is Data Engineering?

Data engineering involves several key responsibilities, including:

- Data Architecture: Designing scalable architectures that can efficiently handle large volumes of data and support data analytics and business intelligence.

- Data Integration: Combining data from various disparate sources into a cohesive and comprehensive dataset, often for analytical purposes.

- Data Warehousing: Creating and maintaining data warehouses, which are centralized repositories of integrated data from one or more sources.

- Data Quality Management: Implementing rigorous processes to ensure that data is accurate, complete, and reliable for analysis.

The Importance of Data Engineering

Data engineering plays a vital role in today’s data-driven landscape. Here are several reasons why it is essential:

- Enabling Analytics: Well-engineered data pipelines allow organizations to perform complex analyses, leading to actionable insights that can drive business strategy.

- Improving Data Quality: Data engineers cleanse and validate data before it reaches reports and dashboards, reducing the errors that would otherwise propagate into reporting and analytics.

- Facilitating Decision-Making: Reliable data empowers business leaders to make informed decisions based on accurate and timely information.

- Scalability: As data volumes grow, scalable data engineering solutions can accommodate increased loads without sacrificing performance.

- Integration of Diverse Data Sources: Data engineering enables the integration of structured and unstructured data from multiple sources, allowing for comprehensive analyses that can reveal new insights.

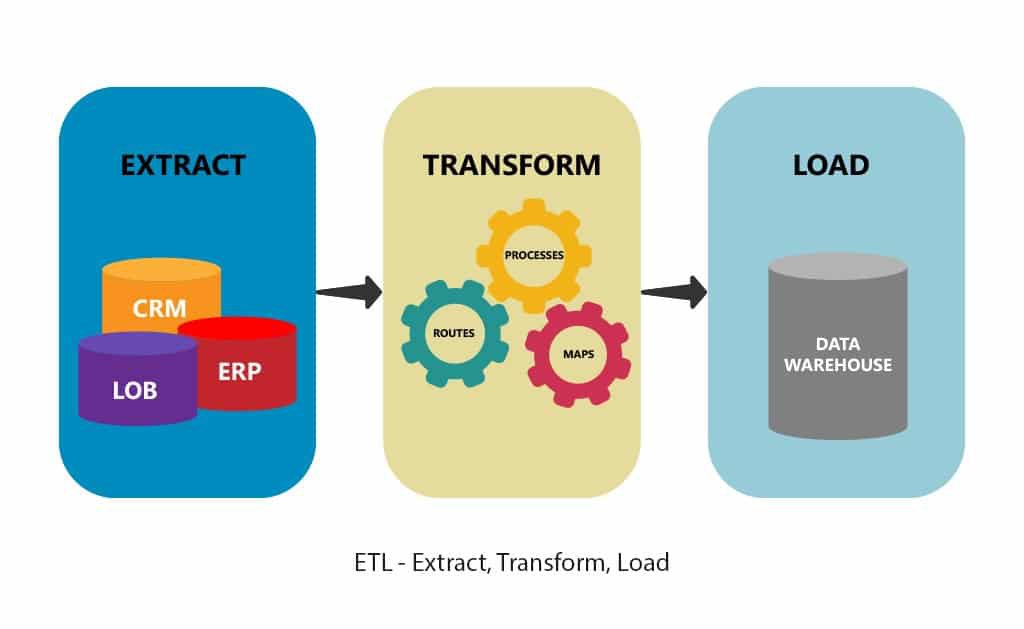

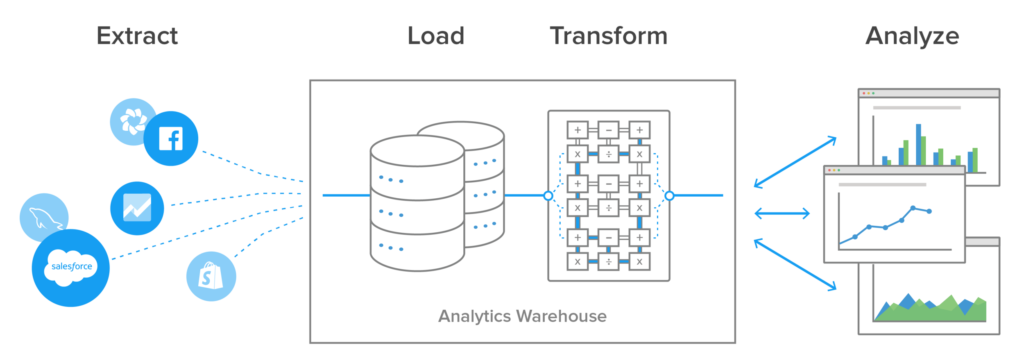

What is ETL?

ETL (Extract, Transform, Load) is a systematic approach to collecting and preparing data for analysis. Each of its three components plays a crucial role in the data pipeline:

1. Extract

In this phase, data is gathered from various sources, including:

- Databases: This includes relational databases (like MySQL, PostgreSQL) and NoSQL databases (like MongoDB).

- APIs: Web APIs (commonly REST-based) expose data programmatically, often including frequently updated or near-real-time data.

- Files: Data can come from flat files such as CSV and from semi-structured file formats such as JSON and XML.

- Web Scraping: This technique is used to collect data from websites that do not provide APIs.

Example: A retail company may extract sales data from its point-of-sale system, customer data from its Customer Relationship Management (CRM) system, and inventory data from its inventory management system.
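
A minimal sketch of the extract step for this retail example, using only the Python standard library. The point-of-sale export is simulated with an in-memory CSV string and the CRM with an in-memory SQLite database; the table, column, and function names are hypothetical.

```python
import csv
import io
import sqlite3

def extract_csv(file_obj) -> list[dict]:
    """Read every row of a CSV file into a dict keyed by the header row."""
    return list(csv.DictReader(file_obj))

def extract_sql(conn: sqlite3.Connection, query: str) -> list[dict]:
    """Run a query and return each row as a dict keyed by column name."""
    cursor = conn.execute(query)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]

# Hypothetical point-of-sale export (a string stands in for the exported file).
pos_export = io.StringIO(
    "sale_id,customer_id,amount,sold_at\n"
    "1,100,19.99,2024-01-15\n"
    "2,101,5.00,2024-02-03\n"
)

# Hypothetical CRM database (in-memory SQLite stands in for the real system).
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?)", [(100, "Alice"), (101, "Bob")])

sales = extract_csv(pos_export)
customers = extract_sql(crm, "SELECT customer_id, name FROM customers ORDER BY customer_id")
print(sales[0])
print(customers)
```

Note that every CSV value arrives as a string (for example, `amount` is `"19.99"`), so type conversion is left to the transform step.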

2. Transform

Once data is extracted, it undergoes a transformation process that can include:

- Cleansing: Removing duplicates, correcting errors, and filling in missing values to ensure accuracy.

- Normalization: Converting data into a consistent format or structure to enable easy analysis.

- Aggregation: Summarizing data (e.g., calculating total sales per month) to create meaningful reports.

- Enrichment: Combining data with additional contextual information (e.g., adding geographic data to customer records for enhanced analysis).
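
Cleansing and enrichment can be sketched as two small functions over lists of dicts. This is an illustration under simple assumptions: duplicates are identified by a single key field, "missing" means `None` or an empty string, and the ZIP-to-region lookup table is hypothetical reference data.

```python
def cleanse(records: list[dict], key: str, defaults: dict) -> list[dict]:
    """Drop records whose `key` was already seen; fill missing or empty fields with defaults."""
    seen = set()
    cleaned = []
    for record in records:
        if record[key] in seen:
            continue  # duplicate: keep only the first occurrence
        seen.add(record[key])
        filled = {**record}
        for field, default in defaults.items():
            if filled.get(field) in (None, ""):
                filled[field] = default
        cleaned.append(filled)
    return cleaned

def enrich(records: list[dict], lookup: dict, join_field: str, new_field: str) -> list[dict]:
    """Add `new_field` taken from a lookup table keyed on `join_field` (None if no match)."""
    return [{**r, new_field: lookup.get(r[join_field])} for r in records]

customers = [
    {"customer_id": 100, "name": "Alice", "zip": "10001"},
    {"customer_id": 100, "name": "Alice", "zip": "10001"},  # duplicate row
    {"customer_id": 101, "name": "Bob", "zip": ""},          # missing ZIP code
]
# Hypothetical reference data mapping ZIP codes to regions.
zip_to_region = {"10001": "New York"}

clean = cleanse(customers, key="customer_id", defaults={"zip": "unknown"})
enriched = enrich(clean, zip_to_region, join_field="zip", new_field="region")
print(enriched)
```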

Example: The retail company might normalize customer names to ensure consistency across systems and aggregate sales data by month for reporting and trend analysis.
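
Both operations from this example fit in a few lines. The sketch below assumes sale dates are ISO strings (`YYYY-MM-DD`) and amounts arrive as text, as they would from a CSV export; the field names `sold_at` and `amount` are hypothetical.

```python
from collections import defaultdict
from decimal import Decimal

def normalize_name(name: str) -> str:
    """Collapse repeated whitespace and apply title case."""
    return " ".join(name.split()).title()

def monthly_sales(sales: list[dict]) -> dict[str, Decimal]:
    """Sum sale amounts per calendar month, keyed 'YYYY-MM' (assumes ISO dates)."""
    totals = defaultdict(Decimal)  # Decimal() starts each month at 0
    for sale in sales:
        month = sale["sold_at"][:7]
        totals[month] += Decimal(sale["amount"])
    return dict(sorted(totals.items()))

sales = [
    {"sold_at": "2024-01-15", "amount": "19.99"},
    {"sold_at": "2024-01-28", "amount": "5.01"},
    {"sold_at": "2024-02-03", "amount": "5.00"},
]
print(normalize_name("  aLICE   smith "))  # Alice Smith
print(monthly_sales(sales))
```

`Decimal` is used instead of `float` so currency totals do not pick up binary rounding errors. The title-case rule is deliberately naive; names such as "McDonald" would need special handling.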

3. Load

After transformation, the data is loaded into a target system, typically a data warehouse or another database. The loading process can be:

- Full Load: All data is loaded into the warehouse, replacing the existing data.

- Incremental Load: Only records that are new or have changed since the last load are inserted or updated in the target system.

Example: The transformed sales, customer, and inventory data are loaded into a data warehouse, where analysts can access it easily for reporting and deriving insights.
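
The difference between the two loading strategies can be sketched against SQLite, standing in for a warehouse. The full load replaces the table contents inside one transaction; the incremental load is an upsert keyed on the primary key. The `sales` table and both function names are hypothetical, and the `ON CONFLICT ... DO UPDATE` syntax assumes SQLite 3.24 or later (bundled with recent Python versions).

```python
import sqlite3

def full_load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Replace the entire target table with `rows` in a single transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute("DELETE FROM sales")
        conn.executemany("INSERT INTO sales (sale_id, amount) VALUES (?, ?)", rows)

def incremental_load(conn: sqlite3.Connection, changed_rows: list[tuple]) -> None:
    """Insert new rows and update existing ones by primary key (an upsert)."""
    with conn:
        conn.executemany(
            "INSERT INTO sales (sale_id, amount) VALUES (?, ?) "
            "ON CONFLICT(sale_id) DO UPDATE SET amount = excluded.amount",
            changed_rows,
        )

warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, amount REAL)")

full_load(warehouse, [(1, 19.99), (2, 5.00)])
incremental_load(warehouse, [(2, 7.50), (3, 12.00)])  # one update, one new row
print(warehouse.execute("SELECT sale_id, amount FROM sales ORDER BY sale_id").fetchall())
```

The incremental load touches only two rows here, while a full load rewrites the whole table; that gap grows with table size.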

The ETL Process in Action

Implementing an effective ETL process involves several critical steps:

- Data Discovery: Identify data sources and understand their structures and formats. This initial step helps in planning the ETL pipeline.

- Data Mapping: Define how data from the sources will be transformed and where it will be stored in the target system. This includes specifying field mappings and transformation logic.

- Automation: Use ETL tools (e.g., Apache NiFi, Talend, Informatica, or AWS Glue) to automate the extraction, transformation, and loading processes, which improves efficiency and reduces manual intervention.

- Monitoring and Maintenance: Continuous monitoring of the ETL processes is essential to maintain data quality and performance. Establish logging mechanisms to track errors and data quality issues.
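
Data mapping and monitoring can be combined in a small sketch: the mapping is declared as data (target column, source field, conversion), and every rejected row is logged with its error. The field names and `FIELD_MAP` structure are hypothetical; dedicated ETL tools express the same idea through their own configuration formats.

```python
import logging
from typing import Any, Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")

# Hypothetical mapping: target column -> (source field, conversion function).
FIELD_MAP: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "sale_id": ("id", int),
    "amount_usd": ("total", float),
    "customer_name": ("cust_name", str.strip),
}

def apply_mapping(rows: list[dict]) -> tuple[list[dict], int]:
    """Map source rows to the target schema; log and count rows that fail conversion."""
    mapped, errors = [], 0
    for i, row in enumerate(rows):
        try:
            mapped.append({
                target: convert(row[source])
                for target, (source, convert) in FIELD_MAP.items()
            })
        except (KeyError, ValueError, TypeError) as exc:
            errors += 1
            log.warning("row %d rejected: %r", i, exc)
    log.info("mapped %d rows, rejected %d", len(mapped), errors)
    return mapped, errors

source_rows = [
    {"id": "1", "total": "19.99", "cust_name": " Alice "},
    {"id": "2", "total": "n/a", "cust_name": "Bob"},  # unparseable amount
]
rows, rejected = apply_mapping(source_rows)
```

Because the mapping lives in a dictionary rather than in code branches, it can be reviewed, versioned, and extended like configuration.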

Best Practices for ETL Implementation

- Use of ETL Tools: Leverage specialized ETL tools that provide built-in functionalities for data extraction, transformation, and loading, which can simplify the process and reduce development time.

- Incremental Loads: Instead of full loads, implement incremental loads where possible to save time and resources. This is particularly important for large datasets.

- Data Validation: Incorporate validation checks during the ETL process to ensure data integrity and accuracy before loading it into the warehouse.

- Performance Tuning: Regularly optimize ETL processes to improve performance and reduce processing time, especially as data volumes increase.
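
Data validation, in particular, is easy to sketch: check each record against a few rules and split the batch into records that are safe to load and records to quarantine, along with the reasons. The rules and field names below are hypothetical examples, not a complete validation framework.

```python
from datetime import date

def validate_sale(sale: dict) -> list[str]:
    """Return a list of problems with one sale record; an empty list means it passed."""
    problems = []
    if not isinstance(sale.get("sale_id"), int):
        problems.append("sale_id must be an integer")
    amount = sale.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("amount must be a non-negative number")
    try:
        date.fromisoformat(sale.get("sold_at", ""))
    except (TypeError, ValueError):
        problems.append("sold_at must be an ISO date (YYYY-MM-DD)")
    return problems

def split_valid(sales: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Partition records into loadable ones and rejected ones paired with their problems."""
    valid, rejected = [], []
    for sale in sales:
        problems = validate_sale(sale)
        if problems:
            rejected.append((sale, problems))
        else:
            valid.append(sale)
    return valid, rejected

sales = [
    {"sale_id": 1, "amount": 19.99, "sold_at": "2024-01-15"},
    {"sale_id": 2, "amount": -5, "sold_at": "2024-02-30"},  # negative amount, invalid date
]
valid, rejected = split_valid(sales)
```

Quarantining bad records instead of silently dropping them keeps an audit trail and lets the rest of the batch load on schedule.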

Challenges in Data Engineering and ETL

Despite their importance, data engineering and the ETL process face several challenges:

- Data Quality Issues: Inconsistent or inaccurate data can lead to erroneous insights, making data quality management a critical aspect of ETL.

- Complexity of Data Sources: Integrating data from diverse sources can be complicated, especially when dealing with different formats and structures.

- Scalability: As data volume grows, maintaining performance and efficiency in ETL processes can become a significant challenge.

- Data Governance and Compliance: Ensuring compliance with data privacy regulations (such as GDPR or CCPA) is critical, requiring data engineers to implement appropriate governance measures.
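
One common governance measure is pseudonymizing direct identifiers, such as email addresses, before they reach the warehouse. Analysts can still join on a stable token without seeing the raw value. The sketch below uses a keyed hash (HMAC-SHA256) rather than a plain hash, because plain hashes of emails can be reversed by hashing lists of candidate addresses. The key and field names are hypothetical. Pseudonymized data can still count as personal data under GDPR, so this is one control among several, not compliance by itself.

```python
import hashlib
import hmac

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with an HMAC-SHA256 token; same input and key give the same token."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()

# Hypothetical key: in practice it would come from a secrets manager, never from source code.
KEY = b"example-key-do-not-use"

record = {"customer_id": 100, "email": "Alice@Example.com", "amount": 19.99}
safe_record = {**record, "email": pseudonymize(record["email"], KEY)}
print(safe_record["email"])
```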

Future Trends in Data Engineering and ETL

As technology evolves, so do the practices and tools used in data engineering and ETL. Some emerging trends include:

- Real-time Data Processing: The demand for real-time analytics is growing, leading to the adoption of streaming ETL processes that enable immediate data processing and analysis.

- Data Lakes vs. Data Warehouses: Organizations increasingly use data lakes alongside data warehouses to store raw, unstructured, and semi-structured data, allowing more flexibility in how data is stored and analyzed.

- Machine Learning Integration: Incorporating machine learning algorithms into the ETL process can enhance data transformation, automate data cleansing, and provide predictive analytics capabilities.

- Cloud-based Solutions: Cloud platforms are becoming more popular for data engineering, offering scalable and cost-effective solutions for data storage and processing.
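
Streaming ETL processes each event as it arrives instead of waiting for a batch. A common streaming transformation is a tumbling-window aggregate, sketched here with a plain Python generator. It assumes events are `(timestamp_seconds, amount)` tuples arriving in timestamp order. Late or out-of-order events, which stream processors such as Apache Flink or Spark Structured Streaming handle explicitly, are not handled, and windows with no events are simply skipped.

```python
from collections.abc import Iterable, Iterator

def tumbling_window_totals(
    events: Iterable[tuple[int, float]], window_seconds: int
) -> Iterator[tuple[int, float]]:
    """Yield (window_start, total) each time a fixed-size window closes."""
    current_window = None
    total = 0.0
    for timestamp, amount in events:
        window = timestamp - timestamp % window_seconds
        if current_window is not None and window != current_window:
            yield current_window, total  # previous window is complete
            total = 0.0
        current_window = window
        total += amount
    if current_window is not None:
        yield current_window, total  # flush the last open window at end of stream

events = [(0, 10.0), (30, 5.0), (65, 2.5), (130, 1.0)]
for window_start, window_total in tumbling_window_totals(events, window_seconds=60):
    print(window_start, window_total)
```

Because the generator emits each window total as soon as the next window starts, downstream consumers see results without waiting for the whole input.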

Conclusion

Data engineering, particularly the ETL process, is vital for modern organizations that seek to harness the power of their data. By effectively extracting, transforming, and loading data, businesses can gain valuable insights, make informed decisions, and remain competitive in today’s data-driven world.

Stay tuned for our next discussion, where we will explore the subsequent stages of the data pipeline and their significance in data analytics!